Computer Vision for the Blind

Inspiration

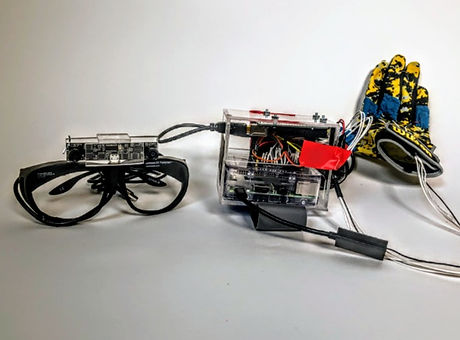

A TED talk by David Eagleman inspired us to develop a sensory substitution device for the visually impaired. In the talk, Eagleman described giving deaf people a sense of hearing through a vest with vibrating motors on the back, which translates sound into patterns of touch. We decided to apply the same concept to help visually impaired people navigate, by letting them feel a depth map of the world around them.

Computer Vision

I developed a Python script that takes in a live stereo video stream, rectifies the two images using a calibration file, and then computes the disparity between them to estimate the relative depth of each object in the visual field, producing a depth map. In real time, the script then maps the grayscale values of the depth map to vibration intensities to be felt on the glove and sends them to the Arduino over a serial connection.
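The depth-to-vibration step can be sketched as follows. This is a minimal sketch, not the project's actual script. It assumes the depth map arrives as an 8-bit grayscale image (a list of rows) in which brighter pixels are closer. This holds for a disparity map, because in a rectified stereo pair depth is inversely proportional to disparity (Z = fB/d for focal length f and baseline B). It also assumes a hypothetical glove with a 3 × 4 grid of motors and a hypothetical 0xFF start byte for framing serial messages. The source states neither the motor count nor the serial format. The stereo matching itself is omitted.

```python
from typing import List

# Hypothetical glove layout: the source does not say how many motors the glove has.
GRID_ROWS = 3
GRID_COLS = 4
# Hypothetical framing byte marking the start of each serial message.
START_BYTE = 0xFF


def depth_to_intensities(depth_map: List[List[int]],
                         rows: int = GRID_ROWS,
                         cols: int = GRID_COLS) -> List[int]:
    """Average an 8-bit depth map over a rows x cols grid, one value per motor.

    Assumes brighter pixels (larger disparity) are closer, so nearer objects
    produce stronger vibration. Values are returned in row-major order.
    """
    height, width = len(depth_map), len(depth_map[0])
    if height < rows or width < cols:
        raise ValueError("depth map is smaller than the motor grid")
    intensities = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            # All pixels that fall under this motor's region of the image.
            cell = [depth_map[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            intensities.append(sum(cell) // len(cell))
    return intensities


def encode_frame(intensities: List[int]) -> bytes:
    """Prefix the intensities with START_BYTE, clamping each to 0..254.

    Clamping below 0xFF keeps payload bytes from being mistaken for the
    start marker on the receiving side.
    """
    payload = bytes(min(max(v, 0), START_BYTE - 1) for v in intensities)
    return bytes([START_BYTE]) + payload
```

Averaging over blocks trades spatial detail for a value per motor. Taking the maximum per cell instead would make small nearby obstacles harder to miss. With the pySerial package, a frame could be sent using `serial.Serial("/dev/ttyACM0", 115200).write(encode_frame(values))`. The port name and baud rate are assumptions.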

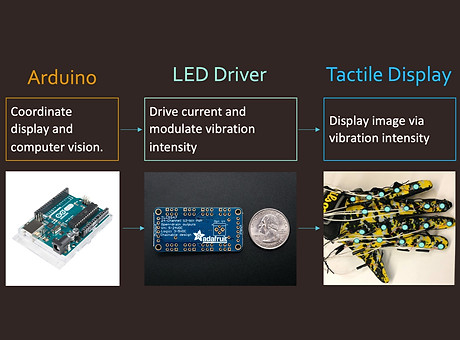

Haptic Display

The vibrating-motor haptic display is controlled by a C/C++ sketch running on the Arduino. The sketch reads the vibration intensity values sent by the Python script and writes them through its digital port to an LED driver, which drives the motors. Taken together, the motor outputs represent the depth map: the shapes of objects in the foreground vibrate more intensely than the background of the video.
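The receiving side's framing logic can be modeled as below. For consistency with the host-side example it is written in Python rather than Arduino C/C++, so it illustrates the logic rather than reproducing the project's sketch. It assumes a hypothetical 0xFF start byte and a 12-motor glove, neither of which the source specifies. It also assumes a hypothetical LED driver with 12-bit PWM resolution (the TLC5940, for example, offers 12-bit PWM per channel). The source does not name the driver.

```python
from typing import Iterable, Iterator, List

START_BYTE = 0xFF  # hypothetical frame delimiter; must match the sender
NUM_MOTORS = 12    # hypothetical motor count


def parse_frames(stream: Iterable[int],
                 num_motors: int = NUM_MOTORS) -> Iterator[List[int]]:
    """Yield one list of intensities per complete frame in a byte stream."""
    frame: List[int] = []
    in_frame = False
    for byte in stream:
        if byte == START_BYTE:
            # A start byte always begins a new frame, discarding any partial one,
            # so the receiver resynchronizes after dropped or corrupted bytes.
            frame = []
            in_frame = True
        elif in_frame:
            frame.append(byte)
            if len(frame) == num_motors:
                yield frame
                frame = []
                in_frame = False


def to_pwm(value: int, bits: int = 12) -> int:
    """Scale an 8-bit intensity to a driver's PWM range (0 .. 2**bits - 1)."""
    return value * ((1 << bits) - 1) // 255
```

On the Arduino, the same state machine would typically run inside `loop()`, consuming bytes with `Serial.read()` as they arrive and updating the driver once a full frame has been received.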

Results

We tested our product by presenting test subjects with realistic challenges, and showed that it can detect obstacles farther away than a cane can. Through collaboration with professors, users, and industry professionals, we have developed a product that improves a blind person's understanding of the physical world around them. Preliminary estimates put the cost at around $300 per unit. If the device were priced at $600 per unit, a company selling it would break even after about 15 months.
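The break-even claim rests on simple arithmetic, sketched below. The source does not give the up-front fixed costs $F$ (for example, development and tooling) or the monthly sales volume $q$. This therefore only shows what the 15-month figure implies, assuming constant monthly sales and that the $300 unit cost covers all per-unit costs.

$$
m = p - c = \$600 - \$300 = \$300 \text{ per unit}
$$

$$
T = \frac{F}{q\,m} \quad\Longrightarrow\quad F = T\,q\,m = 15 \times q \times \$300 = \$4{,}500\,q
$$

The 15-month estimate therefore holds only if fixed costs come to about $4,500 for every unit sold per month. Higher fixed costs or lower sales would push break-even later.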